C# WinForm: A Minimal "Music Player" Mini-Project


This afternoon I worked through a simple music player, and I am writing it down here so that C# WinForm beginners can use it for practice!

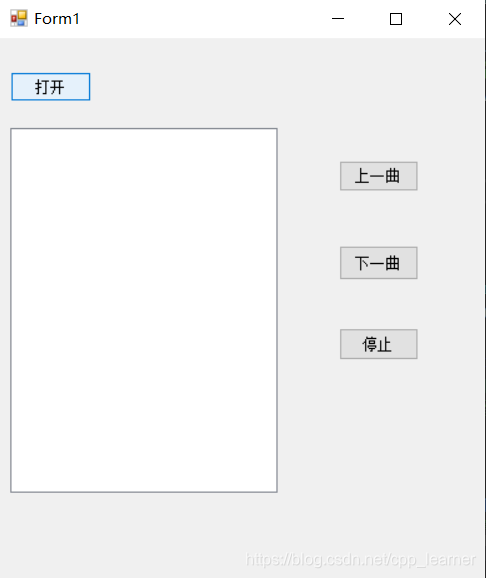

Screenshot of the result:

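Namespaces required:

- using System.Collections.Generic; for the generic List<T>
- using System.IO; for Path.GetFileName, which returns the file name together with its extension
- using System.Media; for SoundPlayer, the class that plays .wav audio
- using System.Windows.Forms; for the OpenFileDialog open-file dialog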

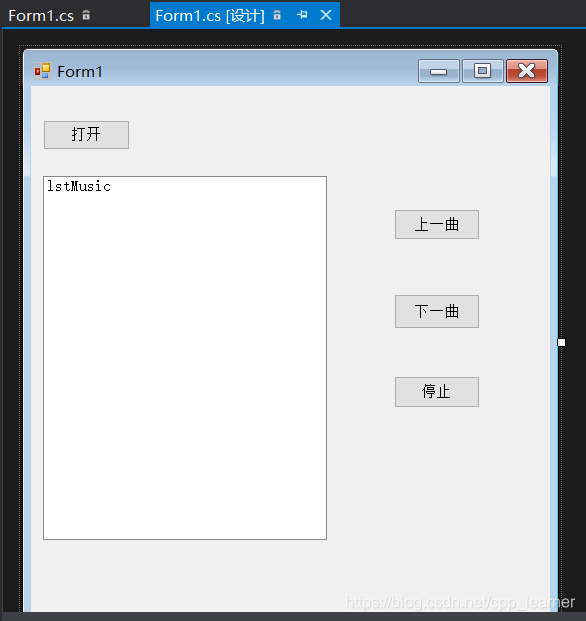

- First, in the designer, drag four Button controls and one ListBox onto the form.

The control names are:

- Open button: btnOpen
- Previous button: btnMovePrevious
- Next button: btnMoveNext
- Stop button: btnStop
- List box: lstMusic

- Implement the Open button

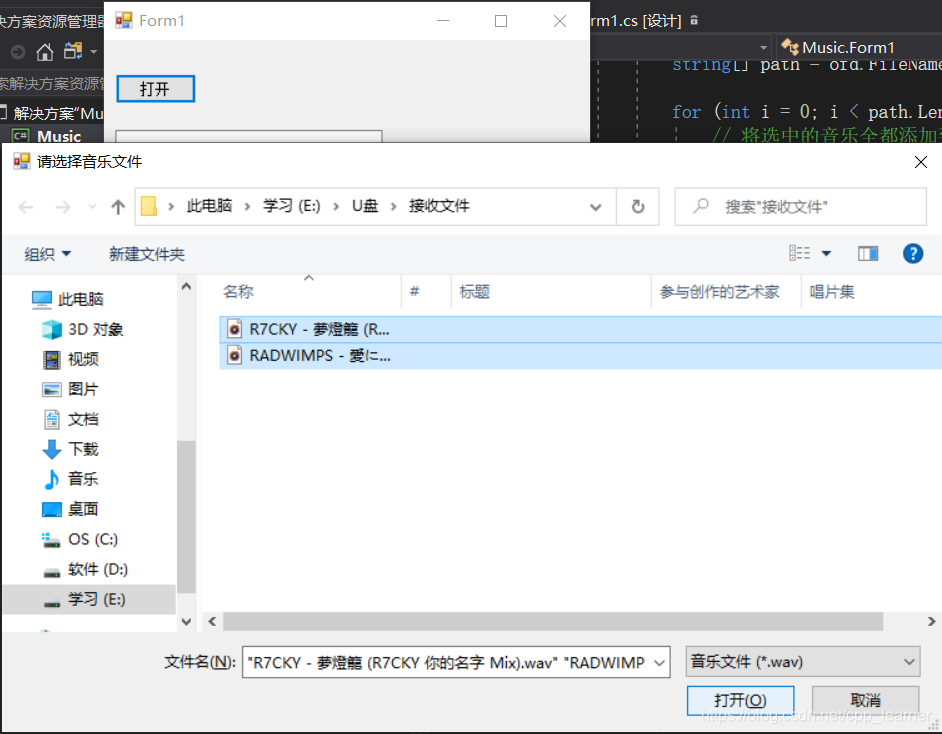

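Configure an OpenFileDialog and add the selected music files to the list box. A generic List<string> stores the full path of each selected file, so the path can be looked up later by list index.

The code is as follows (it is commented in detail):

    // Stores the full paths of the selected music files
    List<string> list = new List<string>(); // generic list (container)

    private void btnOpen_Click(object sender, EventArgs e)
    {
        OpenFileDialog ofd = new OpenFileDialog();   // open-file dialog
        ofd.Title = "请选择音乐文件";                  // dialog title ("Please select music files")
        ofd.InitialDirectory = @"E:\U盘\接收文件";     // default folder shown when the dialog opens
        ofd.Multiselect = true;                      // allow selecting several files
        ofd.Filter = "音乐文件|*.wav|全部文件|*.*";     // filter: music files (*.wav) or all files

        // Show the dialog; do nothing if the user cancels
        if (ofd.ShowDialog() != DialogResult.OK)
        {
            return;
        }

        // Full paths of all files selected in the dialog
        string[] path = ofd.FileNames;
        for (int i = 0; i < path.Length; i++)
        {
            // Show the file name (with extension) in the ListBox
            this.lstMusic.Items.Add(Path.GetFileName(path[i]));
            // Keep the full path at the same index
            list.Add(path[i]);
        }
    }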

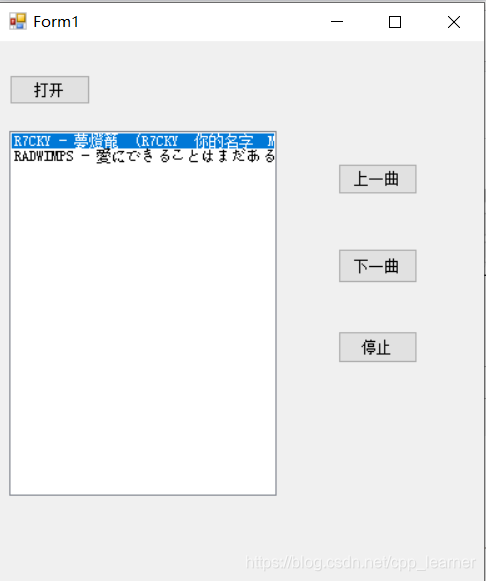

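- Bind the ListBox's DoubleClick event so that double-clicking a song starts playing it

The code is as follows (it is commented in detail):

    // Instantiate SoundPlayer, the class that plays .wav audio
    SoundPlayer player = new SoundPlayer();

    private void lstMusic_DoubleClick(object sender, EventArgs e)
    {
        // Ignore double-clicks when no item is selected (SelectedIndex is -1)
        if (this.lstMusic.SelectedIndex < 0)
        {
            return;
        }

        // Double-click to play: point the player at the selected file
        player.SoundLocation = list[this.lstMusic.SelectedIndex];
        player.Load(); // load synchronously
        player.Play(); // start playback
    }

Once this third step is done, you can already run the program and play music!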

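- Implement Next; Previous works the same way. First get the currently selected index, then increment it (remember to guard against going out of range), assign the matching path to the player's SoundLocation property, and finally call Play().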

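The code is as follows (it is commented in detail):

    // Next track
    private void btnMoveNext_Click(object sender, EventArgs e)
    {
        // Nothing to play if the list is empty
        if (this.lstMusic.Items.Count == 0)
        {
            return;
        }

        // Get the index of the currently selected song
        int index = this.lstMusic.SelectedIndex;
        index++; // increment the index to point at the next song

        // Guard against running past the end: wrap to the first song
        if (index == this.lstMusic.Items.Count)
        {
            index = 0;
        }

        // Make the new index the selected item
        this.lstMusic.SelectedIndex = index;

        // Assign the file and play it
        player.SoundLocation = list[index];
        player.Load(); // load the sound synchronously
        player.Play(); // start playback
    }

Previous works the same way; the code is as follows: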

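    // Previous track
    private void btnMovePrevious_Click(object sender, EventArgs e)
    {
        // Nothing to play if the list is empty
        if (this.lstMusic.Items.Count == 0)
        {
            return;
        }

        int index = this.lstMusic.SelectedIndex;
        index--;

        // Guard against running past the start: wrap to the last song
        if (index < 0)
        {
            index = this.lstMusic.Items.Count - 1;
        }

        this.lstMusic.SelectedIndex = index;
        player.SoundLocation = list[index];
        player.Load();
        player.Play();
    }

- The Stop button simply calls Stop()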

The code is as follows:

    // Stop
    private void btnStop_Click(object sender, EventArgs e)
    {
        player.Stop(); // stop the music
    }

That's it, those are all the steps!
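The wrap-around used by the Next and Previous handlers can also be expressed as one small helper. The following is only a sketch, not part of the original project: the names PlaylistIndex and Move are hypothetical, and the helper assumes that an index of -1 means "nothing selected", as ListBox.SelectedIndex does. It returns -1 for an empty list so the caller can skip playback.

```csharp
using System;

// Hypothetical helper, not part of the original project.
static class PlaylistIndex
{
    // Moves `current` by `step` positions and wraps around a list of `count` items.
    // Returns -1 when the list is empty so the caller can skip playback.
    public static int Move(int current, int step, int count)
    {
        if (count <= 0)
        {
            return -1;
        }

        // Nothing selected yet: Next starts at the first song, Previous at the last.
        if (current < 0)
        {
            return step >= 0 ? 0 : count - 1;
        }

        // C#'s % can yield a negative remainder, so add count before the final %.
        return ((current + step) % count + count) % count;
    }
}

static class Program
{
    static void Main()
    {
        Console.WriteLine(PlaylistIndex.Move(2, +1, 3)); // last song, Next: 0
        Console.WriteLine(PlaylistIndex.Move(0, -1, 3)); // first song, Previous: 2
        Console.WriteLine(PlaylistIndex.Move(0, +1, 0)); // empty list: -1
    }
}
```

With such a helper, both button handlers could compute the new index with a single call (step +1 for Next, -1 for Previous) and return early when the result is -1.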

Full code:

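    using System;
    using System.Collections.Generic;
    using System.ComponentModel;
    using System.Data;
    using System.Drawing;
    using System.IO;
    using System.Linq;
    using System.Media;
    using System.Text;
    using System.Threading.Tasks;
    using System.Windows.Forms;

    namespace Music
    {
        public partial class Form1 : Form
        {
            public Form1()
            {
                InitializeComponent();
            }

            // Stores the full paths of the selected music files
            List<string> list = new List<string>(); // generic list (container)

            private void btnOpen_Click(object sender, EventArgs e)
            {
                OpenFileDialog ofd = new OpenFileDialog();   // open-file dialog
                ofd.Title = "请选择音乐文件";                  // dialog title ("Please select music files")
                ofd.InitialDirectory = @"E:\U盘\接收文件";     // default folder shown when the dialog opens
                ofd.Multiselect = true;                      // allow selecting several files
                ofd.Filter = "音乐文件|*.wav|全部文件|*.*";     // filter: music files (*.wav) or all files

                // Show the dialog; do nothing if the user cancels
                if (ofd.ShowDialog() != DialogResult.OK)
                {
                    return;
                }

                // Full paths of all files selected in the dialog
                string[] path = ofd.FileNames;
                for (int i = 0; i < path.Length; i++)
                {
                    // Show the file name (with extension) in the ListBox
                    this.lstMusic.Items.Add(Path.GetFileName(path[i]));
                    // Keep the full path at the same index
                    list.Add(path[i]);
                }
            }

            // The class that plays .wav audio
            SoundPlayer player = new SoundPlayer();

            private void lstMusic_DoubleClick(object sender, EventArgs e)
            {
                // Ignore double-clicks when no item is selected (SelectedIndex is -1)
                if (this.lstMusic.SelectedIndex < 0)
                {
                    return;
                }

                // Double-click to play: point the player at the selected file
                player.SoundLocation = list[this.lstMusic.SelectedIndex];
                player.Load(); // load synchronously
                player.Play(); // start playback
            }

            // Stop
            private void btnStop_Click(object sender, EventArgs e)
            {
                player.Stop(); // stop the music
            }

            // Previous track
            private void btnMovePrevious_Click(object sender, EventArgs e)
            {
                // Nothing to play if the list is empty
                if (this.lstMusic.Items.Count == 0)
                {
                    return;
                }

                int index = this.lstMusic.SelectedIndex;
                index--;

                // Guard against running past the start: wrap to the last song
                if (index < 0)
                {
                    index = this.lstMusic.Items.Count - 1;
                }

                this.lstMusic.SelectedIndex = index;
                player.SoundLocation = list[index];
                player.Load();
                player.Play();
            }

            // Next track
            private void btnMoveNext_Click(object sender, EventArgs e)
            {
                // Nothing to play if the list is empty
                if (this.lstMusic.Items.Count == 0)
                {
                    return;
                }

                // Get the index of the currently selected song
                int index = this.lstMusic.SelectedIndex;
                index++; // increment the index to point at the next song

                // Guard against running past the end: wrap to the first song
                if (index == this.lstMusic.Items.Count)
                {
                    index = 0;
                }

                // Make the new index the selected item
                this.lstMusic.SelectedIndex = index;

                // Assign the file and play it
                player.SoundLocation = list[index];
                player.Load();
                player.Play();
            }
        }
    }

Summary: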

Note: the SoundPlayer class can only play .wav files! This little project should not be too hard, and it suits anyone who is just starting out with WinForm.
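Because the dialog's filter also offers "all files", a non-.wav file can end up in the list. One way to guard against that is to keep only paths whose extension is .wav before adding them. The following is only a sketch under that assumption: WavFilter and KeepWavFiles are hypothetical names, and the check looks at the extension alone, so a file that is named .wav but holds data SoundPlayer cannot decode would still fail at playback.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical helper, not part of the original project.
static class WavFilter
{
    // Returns only the paths whose extension is ".wav" (case-insensitive),
    // preserving the original order.
    public static List<string> KeepWavFiles(IEnumerable<string> paths)
    {
        var result = new List<string>();
        foreach (string p in paths)
        {
            string ext = Path.GetExtension(p);
            if (string.Equals(ext, ".wav", StringComparison.OrdinalIgnoreCase))
            {
                result.Add(p);
            }
        }
        return result;
    }
}

static class Program
{
    static void Main()
    {
        // Illustrative file names only
        var picked = new[] { "song.wav", "demo.WAV", "track.mp3", "notes" };
        foreach (string p in WavFilter.KeepWavFiles(picked))
        {
            Console.WriteLine(p); // prints song.wav, then demo.WAV
        }
    }
}
```

In the Open handler, the array from ofd.FileNames could be passed through such a filter before the loop that fills lstMusic and list.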

Reprinted from: http://jwvjz.baihongyu.com/
